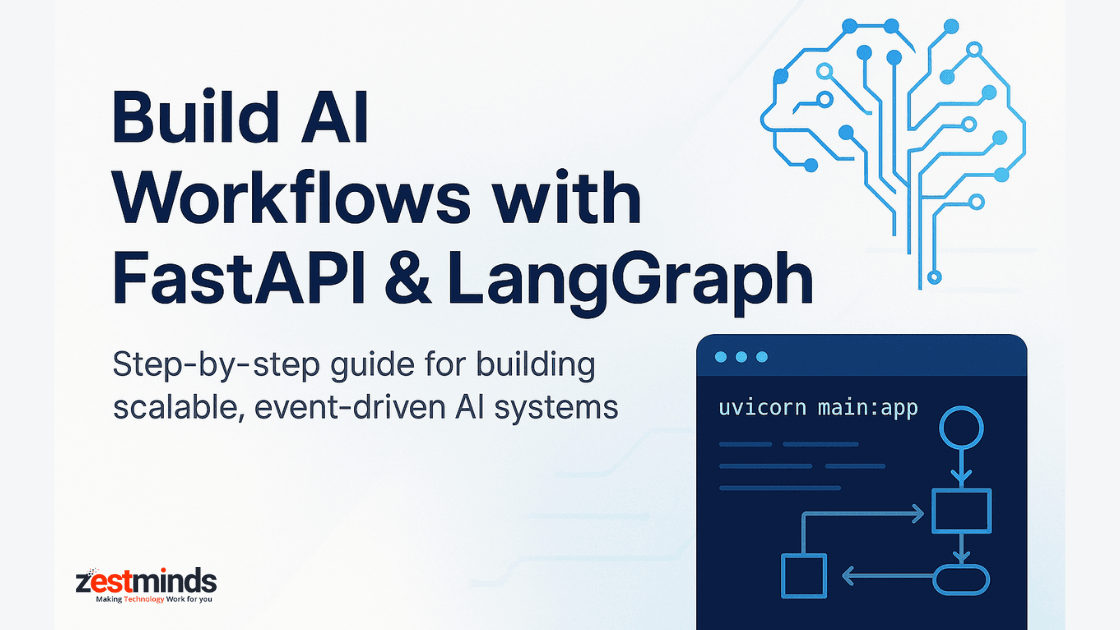

Build AI Workflows with FastAPI & LangGraph: Step-by-Step Guide for 2025

Want to build smarter, scalable AI systems with real-time agents and reliable logic flows?

This guide walks you through the entire process: planning, building, integrating, and deploying AI workflows using LangGraph's stateful agent graph and FastAPI's high-performance backend.

Ideal for CTOs, AI developers, startup founders, and tech leads looking to orchestrate modular, reliable AI systems with OpenAI, vector DBs, and real-time agents.

What You'll Learn

- What LangGraph is and how it differs from LangChain

- Why FastAPI + LangGraph is a powerful stack for modern AI workflows

- How to build and orchestrate your first LangGraph agent

- Best practices for production-ready AI pipelines

- Deployment tips with Docker & cloud services

- Real-world use cases and performance considerations

Why LangGraph Is a Game Changer

"LangGraph feels like the React of AI agents. It helps you reason about flow, state, and structure, without losing your mind."

LangGraph is a stateful, graph-based orchestration library for AI agents. Think of it as the missing piece between your LLMs (such as OpenAI's GPT models) and real-world applications.

It solves three key problems most AI teams face:

- Memory + Context Handling: Manage state across multiple calls

- Flow Control: Define retry logic, conditional steps, branches

- Multi-Agent Workflows: Build coordination between AI + tools + humans

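LangGraph comes from the LangChain team, but it takes a different approach. LangChain's chains run steps in a fixed sequence. LangGraph models a workflow as a graph: nodes are functions that read and update a shared state, and edges (including conditional edges and loops) decide which node runs next. This finite-state-machine-like design gives you finer control over branching and retries and makes every step easier to inspect.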
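To make the state-machine idea concrete, here is a toy, stdlib-only model of it. This is not how LangGraph is implemented, and `run_graph`, `SUPPORT_NODES`, `SUPPORT_EDGES`, and the "refund" routing rule are all hypothetical. Nodes return updates that are merged into a shared state, and each edge is either a fixed next node or a router function that picks one from the current state.

```python
from typing import Callable, Dict, Union

State = Dict[str, object]
Node = Callable[[State], State]
# An edge is either a fixed next-node name or a router that picks one from the state
Edge = Union[str, Callable[[State], str]]

END = "__end__"


def run_graph(nodes: Dict[str, Node], edges: Dict[str, Edge], entry: str,
              state: State, max_steps: int = 20) -> State:
    """Toy executor: run one node at a time and merge its updates into the shared state."""
    current, steps = entry, 0
    while current != END:
        if steps >= max_steps:
            raise RuntimeError("max_steps exceeded; the graph may be stuck in a loop")
        updates = nodes[current](state)
        state = {**state, **updates}  # each returned key overwrites the old value
        edge = edges[current]
        current = edge(state) if callable(edge) else edge
        steps += 1
    return state


# Hypothetical two-node support flow: draft an answer, escalate refunds to a human
SUPPORT_NODES: Dict[str, Node] = {
    "draft": lambda s: {"reply": f"Draft answer to: {s['query']}",
                        "needs_human": "refund" in str(s["query"]).lower()},
    "human": lambda s: {"reply": "Forwarded to a human agent."},
}
SUPPORT_EDGES: Dict[str, Edge] = {
    "draft": lambda s: "human" if s["needs_human"] else END,
    "human": END,
}

if __name__ == "__main__":
    result = run_graph(SUPPORT_NODES, SUPPORT_EDGES, "draft", {"query": "I need a refund"})
    print(result["reply"])  # Forwarded to a human agent.
```

The `max_steps` guard matters because graphs, unlike chains, can loop; a real orchestrator needs some equivalent limit.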

Learn more in the official LangGraph documentation.

Why Pair LangGraph with FastAPI?

While LangGraph handles AI orchestration, FastAPI takes care of the backend interface, APIs, and performance.

Together, they make a dream stack for:

- Building fast, async-ready endpoints

- Managing user inputs, sessions, or tasks

- Deploying as microservices or monoliths

- Seamlessly integrating LangGraph agents via HTTP or background jobs

"Imagine LangGraph as the brain coordinating multiple agents. FastAPI is the nervous system—fast, reactive, and structured—that lets the brain interact with the world."

What You'll Build

We'll build a simple customer support AI agent that:

- Accepts user input via a FastAPI endpoint

- Passes data to a LangGraph workflow

- Uses an OpenAI agent to draft a response

- Routes complex queries to human support

- Logs the conversation in a vector DB for later retrieval

Step 1: Install Your Stack

Use pip to install the required libraries:

```bash
pip install langgraph fastapi uvicorn openai weaviate-client
```

If you're using Docker (recommended for production), prepare your Dockerfile and a .env file holding your OpenAI and Weaviate API keys.
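A small sketch for reading those keys at startup so the app fails fast when one is missing. `OPENAI_API_KEY` is the variable the official OpenAI Python client reads by default; `WEAVIATE_URL` and `WEAVIATE_API_KEY` are names assumed for this example, and `Settings` and `load_settings` are hypothetical helpers.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    openai_api_key: str
    weaviate_url: str
    weaviate_api_key: Optional[str]  # optional: a local Weaviate may not need one


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read required keys from the environment and fail fast if any are missing."""
    missing = [name for name in ("OPENAI_API_KEY", "WEAVIATE_URL") if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing required environment variables: {', '.join(missing)}")
    return Settings(
        openai_api_key=env["OPENAI_API_KEY"],
        weaviate_url=env["WEAVIATE_URL"],
        weaviate_api_key=env.get("WEAVIATE_API_KEY"),
    )
```

A .env file is not read automatically: pass it in with `docker run --env-file .env` or load it with a package such as python-dotenv before calling `load_settings()`.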

Step 2: Define Your FastAPI App

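```python
# main.py
from fastapi import FastAPI
from pydantic import BaseModel

# Your own module wrapping the compiled LangGraph graph from Step 3
from your_langgraph_module import run_agent_workflow

app = FastAPI()


class SupportRequest(BaseModel):
    query: str  # FastAPI returns a 422 error if "query" is missing


@app.post("/support")
def support_handler(payload: SupportRequest):
    # A plain `def` endpoint runs in FastAPI's threadpool, so the blocking
    # workflow call doesn't stall the event loop for other requests
    response = run_agent_workflow(payload.query)
    return {"reply": response}
```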

FastAPI handles the HTTP layer and routes the input to the LangGraph-powered logic.
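The endpoint assumes `your_langgraph_module` exposes `run_agent_workflow`. One possible shape, assuming that module also holds the compiled `graph` from Step 3, is a thin wrapper around the compiled graph's `invoke()` method, which takes the initial state and returns the final state. `make_workflow_runner`, `CompiledGraph`, and the `reply` state key are hypothetical names for this sketch.

```python
from typing import Any, Callable, Dict, Protocol


class CompiledGraph(Protocol):
    """The one method this sketch needs from a compiled LangGraph graph."""

    def invoke(self, input: Dict[str, Any]) -> Dict[str, Any]: ...


def make_workflow_runner(graph: CompiledGraph) -> Callable[[str], str]:
    def run_agent_workflow(query: str) -> str:
        # Seed the graph's state with the user's query and run it to completion
        final_state = graph.invoke({"query": query})
        # Assumes some node writes the answer under "reply"
        return str(final_state.get("reply", ""))

    return run_agent_workflow
```

In the module you would write `run_agent_workflow = make_workflow_runner(graph)`. Taking the graph as a parameter, rather than importing it globally, lets tests pass in a fake graph without calling OpenAI.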

Step 3: Define LangGraph Workflow

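```python
from typing import TypedDict

from langgraph.graph import END, StateGraph

# agents is your own module: each node takes the state and returns a dict of updates
from agents import openai_agent, human_escalation


class SupportState(TypedDict):
    query: str
    reply: str
    needs_human: bool


def condition_fn(state: SupportState) -> str:
    # Escalate when the LLM node flagged the query; otherwise finish the run
    return "escalate" if state.get("needs_human") else "done"


workflow = StateGraph(SupportState)
workflow.add_node("LLM", openai_agent)
workflow.add_node("Human", human_escalation)
workflow.set_entry_point("LLM")
workflow.add_conditional_edges("LLM", condition_fn, {"escalate": "Human", "done": END})
workflow.add_edge("Human", END)

graph = workflow.compile()
```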

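Each node is a function that receives the current state and returns the keys it wants to update. After the LLM node runs, condition_fn inspects the state and decides whether the run finishes or escalates to the Human node. You can add more nodes the same way for search, retrieval, analytics, and so on.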
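The `agents` module isn't shown above, so here is one hypothetical way to write its two node functions. To keep the sketch self-contained, the LLM call is injected as a plain `complete(prompt) -> str` function, and the escalation policy (a keyword list plus an `ESCALATE` marker the prompt asks the model to emit) is an assumption you would replace with your own rules. `make_openai_agent`, `ESCALATION_KEYWORDS`, and `PROMPT` are illustrative names.

```python
from typing import Callable, Dict

# Hypothetical escalation policy: topics that always go to a person
ESCALATION_KEYWORDS = ("refund", "legal", "complaint", "cancel my account")

PROMPT = (
    "You are a customer support assistant. Answer the question below. "
    "If it needs a human (billing disputes, account changes), reply with ESCALATE only.\n\n"
    "Question: {query}"
)

# With the official openai package (v1+), `complete` might look like this
# (the model name is only an example):
#   client = OpenAI()
#   complete = lambda p: client.chat.completions.create(
#       model="gpt-4o-mini", messages=[{"role": "user", "content": p}]
#   ).choices[0].message.content


def make_openai_agent(complete: Callable[[str], str]) -> Callable[[dict], Dict[str, object]]:
    """Build the "LLM" node around any prompt -> text function."""

    def openai_agent(state: dict) -> Dict[str, object]:
        query = state["query"]
        if any(word in query.lower() for word in ESCALATION_KEYWORDS):
            # Skip the LLM call entirely for topics we always escalate
            return {"reply": "", "needs_human": True}
        draft = complete(PROMPT.format(query=query)).strip()
        return {"reply": draft, "needs_human": draft.upper().startswith("ESCALATE")}

    return openai_agent


def human_escalation(state: dict) -> Dict[str, object]:
    """The "Human" node: hand off instead of answering (ticketing integration not shown)."""
    return {"reply": "Thanks! A member of our support team will follow up shortly."}
```

In `agents.py` you would then bind the real client once, e.g. `openai_agent = make_openai_agent(complete)`, so the graph code in Step 3 can import `openai_agent` directly.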

Step 4: Test Locally with Uvicorn

```bash
uvicorn main:app --reload
```

This assumes the Step 2 code lives in main.py. Hit the /support endpoint using Postman or curl:

```bash
curl -X POST http://localhost:8000/support -H "Content-Type: application/json" -d '{"query":"Can I cancel my order?"}'
```

Step 5: Dockerize and Deploy

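Here's a simple Dockerfile (it assumes a requirements.txt listing the packages from Step 1):

```dockerfile
FROM python:3.11-slim
WORKDIR /app
# Install dependencies first so this layer is cached between code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]
```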

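You can deploy this image to Render, Railway, or AWS ECS. Keep secrets out of the image: add .env to a .dockerignore file so the `COPY` step doesn't copy it in, and supply the keys as environment variables at runtime (for example, `docker run --env-file .env -p 8000:8000 <image>`) or through your platform's secrets manager.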

Pro Tips for Production

- Use Weaviate or Pinecone for vector search

- Integrate LangSmith for observability

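- Add retries and circuit breakers around LangGraph nodes that call external APIs

- Use FastAPI's BackgroundTasks for work that can finish after the response is sent, such as writing the conversation to your vector DB

- Export node transitions and latencies as Prometheus metrics and chart them in Grafana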
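A minimal, stdlib-only sketch of the retry and circuit-breaker tip, written as a decorator you could wrap around a node function such as the LLM node. `resilient_node`, `CircuitOpenError`, and all default thresholds are hypothetical. It retries with exponential backoff and, after a run of consecutive failures, refuses calls for a cool-down period so a failing upstream API isn't hammered. Recent LangGraph releases also document a built-in retry policy for nodes; check whether it covers your case before rolling your own.

```python
import functools
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the node while the circuit is open."""


def resilient_node(retries: int = 3, base_delay: float = 0.5,
                   failure_threshold: int = 5, cooldown: float = 30.0,
                   sleep: Callable[[float], None] = time.sleep,
                   clock: Callable[[], float] = time.monotonic):
    """Retry a node with exponential backoff and trip a circuit after repeated failures."""

    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        consecutive_failures = 0
        opened_at: Optional[float] = None  # when the circuit opened; None while closed

        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            nonlocal consecutive_failures, opened_at
            if opened_at is not None:
                if clock() - opened_at < cooldown:
                    raise CircuitOpenError(f"{fn.__name__}: circuit open, skipping call")
                opened_at = None  # cool-down elapsed: allow a trial call (half-open)
            for attempt in range(retries + 1):
                try:
                    result = fn(*args, **kwargs)
                    consecutive_failures = 0  # any success closes the circuit fully
                    return result
                except Exception:
                    consecutive_failures += 1
                    if consecutive_failures >= failure_threshold:
                        opened_at = clock()  # trip the breaker and stop retrying
                        raise
                    if attempt == retries:
                        raise
                    sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ... by default
            raise AssertionError("unreachable")

        return wrapper

    return decorator
```

Usage: decorate the node before registering it, e.g. `workflow.add_node("LLM", resilient_node()(openai_agent))`. The injectable `sleep` and `clock` exist only so the behavior can be tested without real waiting.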

Real-World Use Cases

- AI-Powered Support Desk: Route tickets to LLMs, knowledge base, or human agents

- Lead Qualification Chatbot: Score leads and sync them with a CRM such as HubSpot

- Generative Marketing Assistant: Auto-draft content based on inputs and templates

Real-World Example: AI-Powered Support Desk in Healthcare

We've implemented a real-world version of this use case in a HIPAA-compliant hospital system, where an AI agent triages patient support tickets and routes them to either medical knowledge bases or human staff.

Read the full case study: AI-Powered Support Desk for a Hospital System

Performance at Scale

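LangGraph's explicit graph makes control flow predictable: routing is deterministic given each node's output (even though the LLM calls themselves are not), and independent branches can run in parallel within a single step. For scalability: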

- Use Gunicorn + Uvicorn workers for FastAPI

- Use Redis queues for distributed task execution

- Measure latency across graph paths and optimize bottlenecks
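One lightweight way to start measuring, before wiring up Prometheus, is to wrap each node function so it records how long it takes; summing node times along a route gives per-path latency. `NodeTimer` is a hypothetical helper, and in production you would export these numbers as metrics rather than keep them in memory.

```python
import time
from collections import defaultdict
from statistics import median
from typing import Callable, Dict, List


class NodeTimer:
    """Record wall-clock duration per node name; wrap node functions with .wrap()."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self.clock = clock
        self.durations: Dict[str, List[float]] = defaultdict(list)

    def wrap(self, name: str, fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        def timed(state: dict) -> dict:
            start = self.clock()
            try:
                return fn(state)
            finally:
                # Recorded even when the node raises, so slow failures show up too
                self.durations[name].append(self.clock() - start)

        return timed

    def summary(self) -> Dict[str, Dict[str, float]]:
        """Call count, median and max seconds per node, slowest median first."""
        stats = {
            name: {"calls": len(d), "median_s": median(d), "max_s": max(d)}
            for name, d in self.durations.items()
        }
        return dict(sorted(stats.items(), key=lambda kv: kv[1]["median_s"], reverse=True))
```

Register wrapped nodes, e.g. `workflow.add_node("LLM", timer.wrap("LLM", openai_agent))`, then check `timer.summary()` to see which node dominates latency.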

Ready to Build an AI Workflow That Scales?

At Zestminds, we help startups, CTOs, and innovation teams turn ideas into production-ready AI systems using:

- LangGraph agent workflows

- FastAPI microservices

- OpenAI, Weaviate, and other LLM tools

- End-to-end DevOps & deployment

Schedule Your Free AI Architecture Session

FAQs

What is LangGraph and how is it used in AI development?

LangGraph is an orchestration library that lets developers define modular, stateful AI agent workflows. It's ideal for building complex systems involving LLMs, tools, and human-in-the-loop logic.

Why should I use FastAPI with LangGraph?

FastAPI is a high-performance Python framework for building APIs. When paired with LangGraph, it offers an ideal stack for AI workflows—blending speed, structure, and flexibility.

How can I deploy LangGraph + FastAPI apps?

Use Docker for containerization, Uvicorn + Gunicorn for scaling, and deploy to platforms like AWS, Railway, or Render. For observability, tools like LangSmith and Prometheus are highly recommended.

Shivam Sharma

About the Author

With over 13 years of experience in software development, I am the Founder, Director, and CTO of Zestminds, an IT agency specializing in custom software solutions, AI innovation, and digital transformation. I lead a team of skilled engineers, helping businesses streamline processes, optimize performance, and achieve growth through scalable web and mobile applications, AI integration, and automation.
