Why Weaviate + OpenAI Was the Right Stack for a Fintech Client

Wondering if Weaviate and OpenAI make a good tech stack for real-world fintech challenges? In this post, we walk through how we used them together to build a fast, scalable AI solution for a live client, results included.

In today’s fast-paced financial world, real-time insights aren’t a luxury—they’re a necessity. One of our fintech clients came to us with a pressing challenge: how to turn thousands of unstructured documents, reports, and client interactions into actionable insights—quickly, securely, and without hiring an army of analysts.

That’s when we proposed a stack powered by Weaviate (vector database) and OpenAI (LLM magic). The result? A blazing-fast semantic search engine + GenAI assistant tailored for finance. Let’s dive into why this combination wasn’t just a good fit—it was the perfect one.

The Client’s Challenge

This client, a mid-sized fintech company in the wealth management space, had one key pain point: information overload.

They were sitting on a mountain of:

- Market reports

- Internal strategy documents

- Client FAQs and communications

- Legal and compliance manuals

Their customer support team was overwhelmed, analysts couldn’t find what they needed, and onboarding new employees felt like dumping 10,000 PDFs on their heads and saying, “good luck.”

The ask was simple:

Can you build us an AI-powered internal search assistant that:

- Understands financial terminology

- Finds answers across our documents instantly

- Works with minimal ongoing maintenance

Spoiler alert: we did. And we didn’t just build a tool—we built their competitive edge.

Why We Chose Weaviate

Let’s be real—vector search is the secret sauce behind most modern AI chatbot apps. But picking the right vector database is a make-or-break decision.

We explored FAISS, Pinecone, Vespa, and Milvus. They all had pros. But Weaviate stood out for a few key reasons:

1. Native Hybrid Search

Weaviate's hybrid search combines vector (semantic) search with BM25 keyword search in a single query, and structured filters can be applied on top. That’s huge in fintech, where exact terms, compliance tags, or dates often matter just as much as meaning.
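To make the idea concrete, here is a toy sketch of how a hybrid ranker can blend a keyword score with a vector score. It is not Weaviate's internal implementation: the `alpha` weighting mirrors the parameter of the same name in Weaviate's hybrid search (1.0 is pure vector, 0.0 is pure keyword), but the min-max normalization, the function names, and the sample scores are assumptions made for illustration.

```python
from typing import Dict, List, Tuple


def normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Min-max scale scores to [0, 1] so the two rankers are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}


def hybrid_rank(
    keyword_scores: Dict[str, float],
    vector_scores: Dict[str, float],
    alpha: float = 0.5,
) -> List[Tuple[str, float]]:
    """Blend scores: alpha=1.0 is pure vector, alpha=0.0 is pure keyword."""
    kw = normalize(keyword_scores)
    vec = normalize(vector_scores)
    doc_ids = set(kw) | set(vec)
    fused = {
        doc_id: alpha * vec.get(doc_id, 0.0) + (1 - alpha) * kw.get(doc_id, 0.0)
        for doc_id in doc_ids
    }
    # Highest fused score first.
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores for three document chunks (made-up IDs and values).
keyword_scores = {"policy-7": 4.2, "report-3": 1.1, "faq-9": 0.0}
vector_scores = {"policy-7": 0.62, "report-3": 0.91, "faq-9": 0.40}

ranking = hybrid_rank(keyword_scores, vector_scores, alpha=0.5)
```

Raising `alpha` favors semantic similarity, while lowering it favors exact term matches such as a regulation number or ticker symbol.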

2. Schema + Modularity

Unlike some vector DBs that just toss in blobs of data, Weaviate’s schema system helped us model documents like:

- Report

- Policy

- ClientQuery

This structure made search results smarter and easier to audit.
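As a rough illustration of what that modeling can look like, the sketch below builds class definitions in the JSON shape used by Weaviate's schema API (`class`, `properties`, `name`, `dataType`). Only the three class names come from the project; every property name, description, and the `make_class` helper are hypothetical.

```python
from typing import Any, Dict, List


def make_class(name: str, description: str, properties: Dict[str, str]) -> Dict[str, Any]:
    """Build one class definition in the shape Weaviate's schema API accepts."""
    return {
        "class": name,
        "description": description,
        "properties": [
            {"name": prop, "dataType": [data_type]}
            for prop, data_type in properties.items()
        ],
    }


# Property names are illustrative assumptions, not the client's real schema.
SCHEMA: List[Dict[str, Any]] = [
    make_class(
        "Report",
        "Market and research reports",
        {"title": "text", "body": "text", "publishedAt": "date"},
    ),
    make_class(
        "Policy",
        "Legal and compliance manuals",
        {"title": "text", "body": "text", "effectiveDate": "date"},
    ),
    make_class(
        "ClientQuery",
        "Client questions and the answers given",
        {"question": "text", "answer": "text", "askedAt": "date"},
    ),
]
```

Typed properties such as a date field are what make it possible to filter or audit results by document type and time period.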

3. Open Source + Fast Setup

As a developer-first team, we loved that Weaviate was open source with Docker support. We had it running in hours, not weeks.

4. Community and Plugins

Weaviate’s ecosystem includes pluggable modules such as the text2vec vectorizers and img2vec for images, plus great community support. Learn more about Weaviate’s architecture.

5. Scalability with Vector Indexing

Weaviate uses HNSW (Hierarchical Navigable Small World) indexing under the hood. Translation? Fast approximate nearest-neighbor retrieval that stays accurate and scalable, even as data grows.
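HNSW is an approximate index, so it helps to see the exact search it is approximating. The sketch below is a brute-force cosine-similarity top-k over a handful of toy vectors. It is not how Weaviate works internally, and the document IDs and three-dimensional vectors are made up for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float],
    vectors: Dict[str, Sequence[float]],
    k: int = 5,
) -> List[Tuple[str, float]]:
    """Exact nearest neighbors: score every vector, then keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy embeddings (real ones have hundreds or thousands of dimensions).
vectors = {
    "etf-guide": [0.9, 0.1, 0.0],
    "tax-memo": [0.1, 0.9, 0.2],
    "onboarding": [0.0, 0.2, 0.9],
}
nearest = top_k([1.0, 0.2, 0.0], vectors, k=2)
```

This exact scan costs one comparison per stored vector on every query. A graph index like HNSW avoids most of those comparisons at the price of occasionally missing a true neighbor.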

In short, Weaviate gave us the bones of a world-class search system. But bones aren’t brains. That’s where OpenAI came in.

Why We Integrated OpenAI

The client didn’t just want documents pulled up—they wanted answers. That meant understanding natural questions like:

- “How did our Q4 portfolio perform against the S&P?”

- “What’s the latest update on tax deferral strategies?”

Here’s why OpenAI (GPT-4) was the obvious choice:

1. Financial Language Understanding

OpenAI’s models handle jargon and acronyms like “ETF”, “REIT”, “10-K”, and even slang like “dead cat bounce.”

2. RAG Capabilities (Retrieval-Augmented Generation)

We built a RAG pipeline where Weaviate fetches the relevant chunks and OpenAI crafts the final answer from them. Grounding the answer in retrieved text reduces hallucinations and lets each answer point back to its sources.
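The grounding step is mostly careful prompt assembly. Here is a minimal sketch, assuming retrieved chunks arrive as a source name plus text. The `Chunk` type, the prompt wording, and the sample file names are all hypothetical, not the production prompt.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    source: str  # e.g. a file name, used for citations
    text: str


def build_rag_prompt(question: str, chunks: List[Chunk]) -> str:
    """Number each chunk so the model can cite it, then append the question."""
    context = "\n\n".join(
        f"[{i}] ({chunk.source}) {chunk.text}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the numbered context below. "
        "Cite sources by number, and say so if the context is insufficient.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Placeholder chunks; the text is invented for the example.
chunks = [
    Chunk("q4-report.pdf", "Placeholder paragraph about Q4 performance."),
    Chunk("tax-faq.pdf", "Placeholder paragraph about tax deferral."),
]
prompt = build_rag_prompt("What changed in Q4?", chunks)
```

Numbering the chunks is what makes the output explainable: a reviewer can trace any cited number back to a specific document.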

3. Fast Iteration with Prompts

Want summaries? FAQs? Legal-safe explanations? With prompt engineering alone, we tailored outputs without retraining models.

4. Secure API Integration

With Azure OpenAI or private proxies, we ensured data safety. Fintech is compliance-heavy—we played it safe.

5. Multilingual Readiness

Some clients spoke Spanish or German. OpenAI handled multi-language input/output, giving us a future-proof edge.

OpenAI turned static documents into dynamic intelligence. Together with Weaviate, it was like giving their database a brain and a mouth.

Architecture Breakdown

We designed a modular, scalable, and secure architecture that fit like a glove for fintech.

Core Components:

- Frontend (React): Chat UI + Admin panel

- Backend (FastAPI): Orchestrator between Weaviate and OpenAI

- Vector DB (Weaviate): Indexed document chunks

- LLM (OpenAI): GPT-4 via API

- Storage (S3 + PostgreSQL): File uploads and metadata

- Security: Auth0 + Role-based access + Encrypted storage

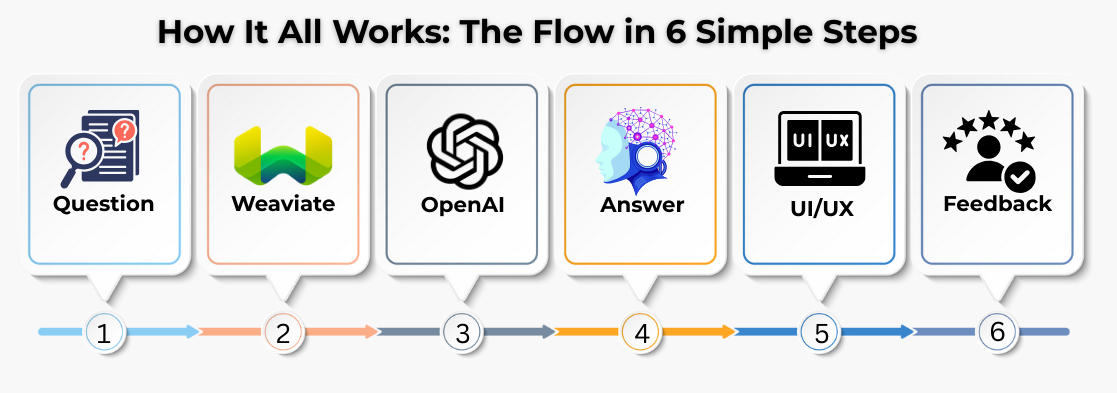

Data Flow:

- User asks a question

- Backend turns it into a semantic query

- Weaviate returns top 5 vector matches

- Context fed to OpenAI

- OpenAI generates natural answer + sources

- Answer + reference shown in UI
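The data flow above can be sketched as a single orchestration function. This is a simplified outline rather than the production backend: the retriever and generator are passed in as plain callables so the example runs without Weaviate or OpenAI installed, and the `Match`, `Answer`, `fake_retrieve`, and `fake_generate` names are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Match:
    source: str
    text: str


@dataclass
class Answer:
    text: str
    sources: List[str]


Retriever = Callable[[str, int], List[Match]]  # (query, limit) -> matches
Generator = Callable[[str], str]               # prompt -> completion


def answer_question(
    question: str,
    retrieve: Retriever,
    generate: Generator,
    top_k: int = 5,
) -> Answer:
    """Retrieve context, feed it to the model, return answer plus sources."""
    matches = retrieve(question, top_k)
    context = "\n".join(f"- {m.text}" for m in matches)
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return Answer(text=generate(prompt), sources=[m.source for m in matches])


# Stand-ins for the vector database and the LLM (placeholder data).
def fake_retrieve(query: str, limit: int) -> List[Match]:
    corpus = [
        Match("q4-report.pdf", "Placeholder text about Q4 performance."),
        Match("policy-12.pdf", "Placeholder text about deferral policy."),
    ]
    return corpus[:limit]


def fake_generate(prompt: str) -> str:
    return "stub answer"


result = answer_question("How did Q4 go?", fake_retrieve, fake_generate)
```

Keeping the retriever and generator behind simple function signatures is also what makes it practical to swap providers or test the flow offline.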

For a deeper dive into chatbot architecture, check out how we build custom GPT apps.

Latency? ~1.2 seconds.

Satisfaction score? 93%.

We also added a feedback loop to improve prompt quality based on user thumbs-up/down.
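One simple way to act on that feedback is to track the approval rate per prompt variant and prefer the one users rate highest. The sketch below assumes feedback is logged as (prompt variant, thumbs-up) pairs; the variant names and sample votes are invented for illustration and do not reflect the client's data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(feedback: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of thumbs-up votes for each prompt variant."""
    ups: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for prompt_id, thumbs_up in feedback:
        totals[prompt_id] += 1
        if thumbs_up:
            ups[prompt_id] += 1
    return {prompt_id: ups[prompt_id] / totals[prompt_id] for prompt_id in totals}


# Hypothetical votes: (prompt variant, was it a thumbs-up?)
feedback = [
    ("concise-v1", True),
    ("concise-v1", False),
    ("cited-v2", True),
    ("cited-v2", True),
    ("cited-v2", False),
]
rates = approval_rates(feedback)
best_variant = max(rates, key=rates.get)
```

With only a handful of votes the rates are noisy, so in practice a minimum sample size per variant would be a sensible guard before switching prompts.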

Results Achieved

The outcome? A total transformation in how their team interacted with information.

| Metric | Before | After (Weaviate + OpenAI) |
|---|---|---|
| Average query time | ~2–5 mins (manual search) | 1.2 seconds |
| Internal support load | High | Reduced by 60% |
| Analyst onboarding | 4 weeks | 1.5 weeks |
| Accuracy of answers | Varies by person | 94% (source-backed) |
| Team satisfaction | Meh | 9.3/10 |

Even their compliance officer smiled. (True story.)

Lessons Learned

What Worked:

- Schema-driven document modeling

- Chunking long docs for vector embedding

- Feedback loop on OpenAI responses

- Hybrid queries (keyword + vector search)
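Chunking is the easiest of these to show in code. Below is a minimal sketch of word-based chunking with overlap, so a sentence that straddles a boundary still appears whole in one chunk. The chunk size, the overlap, and splitting on whitespace are assumptions; the project's actual chunking parameters are not stated here.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into word windows; consecutive windows share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
pieces = chunk_words(sample, chunk_size=4, overlap=1)
```

Each chunk is then embedded and stored on its own, which keeps the retrieved context short enough to fit in a prompt.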

What Needed Tuning:

- Long prompts mean more tokens and higher cost

- Some financial abbreviations needed custom glossary

- OpenAI API calls needed retries under load (we added caching to cut repeat requests)
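For the retry and caching point, a wrapper along these lines is one common pattern. It is a sketch under assumptions, not the code we shipped: `TransientError` is a hypothetical stand-in for whatever rate-limit or timeout exception the client library raises, the cache is an in-memory dict keyed on the exact prompt, and the backoff values are arbitrary.

```python
import time
from typing import Callable, Dict


class TransientError(Exception):
    """Hypothetical stand-in for a rate-limit or timeout error."""


def with_retry_and_cache(
    call_llm: Callable[[str], str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[[str], str]:
    """Wrap an LLM call with exponential backoff and an exact-match cache."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    cache: Dict[str, str] = {}

    def wrapped(prompt: str) -> str:
        if prompt in cache:
            return cache[prompt]  # repeat question: no API call at all
        for attempt in range(max_attempts):
            try:
                result = call_llm(prompt)
            except TransientError:
                if attempt == max_attempts - 1:
                    raise  # out of attempts, surface the error
                sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
            else:
                cache[prompt] = result
                return result
        raise RuntimeError("unreachable")

    return wrapped
```

An exact-match cache only helps when users repeat the same question verbatim, so it pairs well with normalizing whitespace and casing before lookup.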

Pro Tip:

We used LangChain for orchestration initially, but later switched to our own logic for more control.

If you’re interested in other chatbot case studies, check out our Easy Marketing App Case Study.

Could This Stack Work for You Too?

If you’re in financial services, legal, insurance, or any data-heavy industry, the answer is yes.

You don’t need to build ChatGPT. You need:

- Fast, meaningful answers from your documents

- A system that speaks your domain language

- Security, scalability, and sanity

Whether it’s onboarding, compliance Q&A, client-facing chatbots, or internal analyst support—this stack delivers.

Need proof GenAI is changing the game? Read our thoughts on the future of AI chatbots.

Want a custom demo or a quick audit of your current search setup? Let’s talk.

Conclusion

The right tools don’t just solve problems—they create leverage.

Weaviate gave us structured vector search. OpenAI gave us language intelligence. Together, they turned one fintech company’s document mess into a self-service knowledge powerhouse.

And the best part? This isn’t rocket science. It’s real tech, solving real business problems.

If you're ready to future-proof your document intelligence, we’re ready to help.

FAQs

1. Is Weaviate open source?

Yes, Weaviate is open source and can be self-hosted or used via their cloud offering. Learn more here.

2. Is OpenAI secure enough for financial data?

If integrated correctly via Azure or with strict API policies, yes. We follow best practices to protect client data.

3. What’s the difference between keyword search and vector search?

Keyword search looks for exact matches. Vector search understands meaning, even if keywords differ.

4. What is RAG in GenAI?

RAG stands for Retrieval-Augmented Generation. It combines search (Weaviate) with generation (OpenAI) to give precise, real answers with context. More on this in our RAG blog.

5. Can I use other LLMs instead of OpenAI?

Yes! The architecture is flexible—you can swap in Cohere, Mistral, or even open-source models like LLaMA.

6. Is this stack expensive to run?

Not really. With caching, token trimming, and smart design, most setups stay cost-efficient even at scale.
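Token trimming, for example, can be as simple as keeping the best-ranked chunks until a budget is reached. The sketch below uses a rough four-characters-per-token estimate, which is an assumption; a real tokenizer would give exact counts, and the budget shown is arbitrary.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Very rough estimate (about 4 characters per token), not a real tokenizer."""
    return max(1, len(text) // 4)


def trim_to_budget(chunks: List[str], max_tokens: int) -> List[str]:
    """Keep chunks in ranked order until adding the next one would exceed the budget."""
    kept: List[str] = []
    used = 0
    for chunk in chunks:  # assumed to be sorted best match first
        cost = estimate_tokens(chunk)
        if used + cost > max_tokens:
            break
        kept.append(chunk)
        used += cost
    return kept


# Three placeholder chunks of roughly 10 tokens each.
ranked_chunks = ["a" * 40, "b" * 40, "c" * 40]
context = trim_to_budget(ranked_chunks, max_tokens=25)
```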

7. How long does it take to deploy such a system?

We built the MVP in under 3 weeks. Full production setup took ~6 weeks.

8. Can it support multi-language queries?

Absolutely. OpenAI handles many languages, and Weaviate can store multilingual embeddings.

9. What happens if OpenAI is down?

We include fallback mechanisms and caching. Plus, it’s possible to switch providers.

10. Do I need a huge tech team to manage this?

Nope. Our client’s 3-person team runs it smoothly with occasional support from us.

Let’s build your AI-powered knowledge engine next.

Shivam Sharma

About the Author

With over 13 years of experience in software development, I am the Founder, Director, and CTO of Zestminds, an IT agency specializing in custom software solutions, AI innovation, and digital transformation. I lead a team of skilled engineers, helping businesses streamline processes, optimize performance, and achieve growth through scalable web and mobile applications, AI integration, and automation.
